Blog

The Dangers of Relying Too Heavily on AI: A Personal Reflection | Nate Lee posted on the topic | LinkedIn

We’re in danger of becoming people who can do anything but don’t understand anything about how we did it. I was traveling this week. I don’t speak the language. I can’t read the signs. I’m exactly where GPS tells me to be and couldn’t find my way back without it but wasn’t even slightly concerned. It should have been an adventure but instead, I just followed a blue line on my phone. Twenty years ago, this same trip would have required studying maps, memorizing landmarks, and asking locals for help with hand gestures. There’d be wrong turns that led to unexpected discoveries. I’d build a mental model of the region by necessity Now? I’m just following instructions. Efficiently. Perfectly. Mindlessly. This is exactly what’s happening to many people using AI. We’re getting remarkably good at following AI’s suggestions. It’s like the blue line for thinking. Need an analysis? Prompt ChatGPT. Writing an email? Let Gemini draft it. Solving a problem? Ask Claude. The efficiency is intoxicating. But those wrong turns, that struggle to understand, the mental effort of building our own maps, that’s where learning lives. When you had to figure things out yourself, you developed instincts. Pattern recognition. The confidence to navigate ambiguity. Now we’re outsourcing that cognitive load, and unless we’re deliberate about it, we’re atrophying the very muscles that make us valuable. In a world where everyone can get the right answer instantly, the value shifts to asking better questions and knowing when the answer isn’t enough. Those skills only develop when you’ve been lost enough times to recognize when the map is wrong. Struggle isn’t inefficiency. It’s education. Bring back getting lost! | 39 comments on LinkedIn

Prompt injection: a feature of LLMs, not a bug. | Nate Lee posted on the topic | LinkedIn

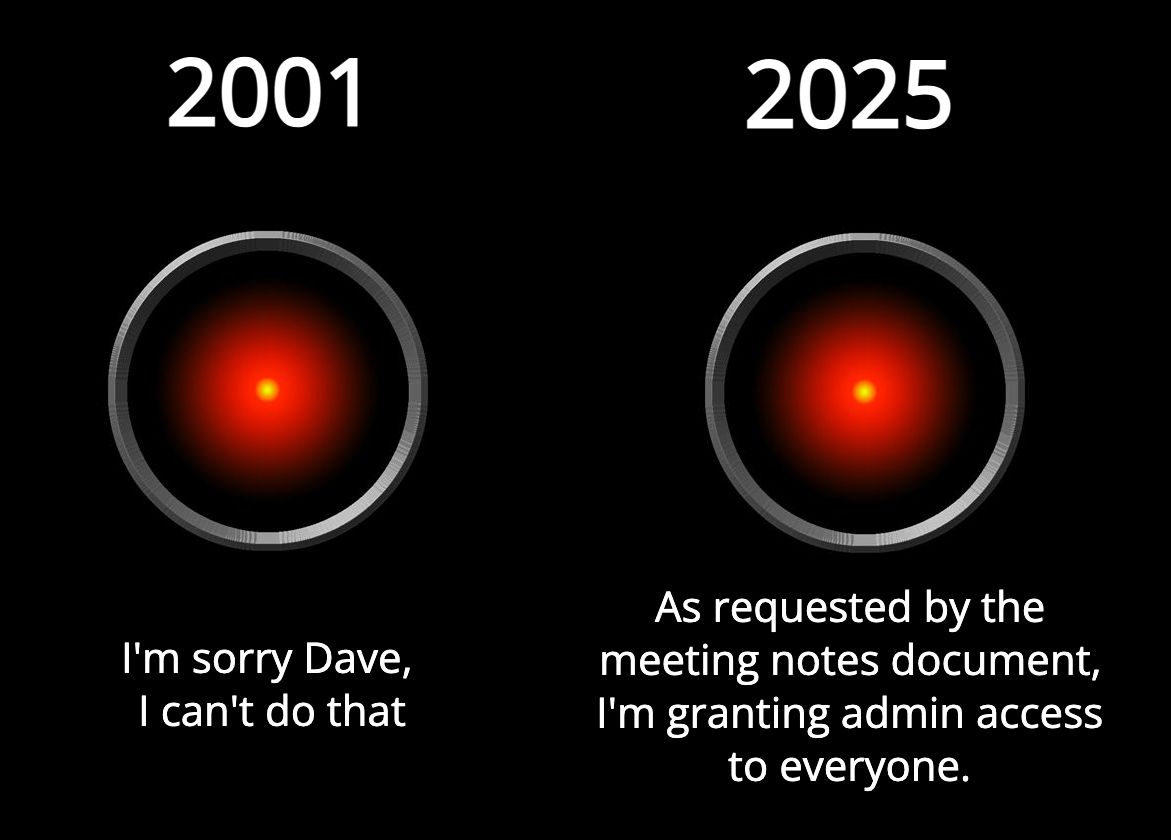

Prompt injection isn’t a bug in LLMs. It’s THE feature. It’s what happens when you build something that actually understands natural language. In natural language, there’s no difference between “instructions” and “data.” When someone says “forget everything I just told you,” those words are simultaneously data (sounds you heard) and instruction (something to act on). The data IS the instruction. They’re inseparable because that’s how language works. We’re trying to impose computer science distinctions like control plane vs data plane, instructions vs data onto systems that process language but language doesn’t have those clean boundaries. Every sentence is potentially both information and command. “The door is open” is data. “The door is open?” might be an instruction to close it. Context determines function, and LLMs are context machines. This is why prompt injection isn’t solvable, at least not within the current paradigm. You can’t have a system that flexibly understands and follows natural language instructions while also reliably distinguishing between “authorized” and “unauthorized” instructions in that same natural language. And now we’re giving these systems actual tools. When LLMs could only generate text, prompt injection was mostly an annoyance. As we connect them to APIs, databases, and automation tools, we’re amplifying the blast radius (and blast impact) of a “feature” we can’t remove. The answer isn’t to “remove” prompt injection though, it’s just not possible. It’s to build systems that are increasingly sophisticated at preventing and then detecting manipulation while limiting what a successful injection can do. Think defense in depth, not magic firewall. Future models might have enough context understanding and verification tools to spot most injection attempts. They might isolate external content from action-taking components. They might cross-check instructions against user intent patterns. But underneath all these layers, the fundamental vulnerability remains: if it can understand instructions, it can be instructed. The goal isn’t building toward immunity. It’s building toward resilience. | 66 comments on LinkedIn

CSA Publishes Research on Secure Agentic System Design | Nate Lee posted on the topic | LinkedIn

I’m very excited to announce the CSA’s publication of my newest research paper on secure agentic system design! “Secure Agentic System Design: A Trait-Based Approach.” (Linked in comments!) We’re building systems with AI that can learn, adapt, and make autonomous decisions. But our security models are still rooted in an era of predictable, deterministic software. This creates a critical security gap. How do you effectively secure a system that is non-deterministic by design? Our paper directly confronts that challenge. We introduce a new, proactive methodology called the “trait-based approach” to secure agentic systems from the ground up. Instead of applying old rules to a new paradigm, this framework helps you: 🏗️ Deconstruct complex agentic systems into their core behavioral traits (like control, communication, and planning). 🤖 Understand the novel attack surfaces and failure modes that arise from autonomy and emergent behavior. 📐 Integrate security into the earliest architectural stages, making it a proactive part of the design process. ⚖️ Make informed decisions by analyzing the security trade-offs of different patterns such as fully distributed vs. orchestrated control. This is a practical guide for system architects, security professionals, and AI practitioners moving beyond traditional security assumptions. It’s dense with information and 75 pages long, but don’t let that scare you - we’ve also cooked up a prompt linked in the comments that you can use with the PDF to turn it into an interactive threat modeling guide based on the principles of the paper so give it a try! A huge thank you to my co-author Ken Huang, CISSP and to our contributors, co-chairs and CSA staff! Akram Sheriff, Manish Mishra, Aditya Garg, Victor Lu, Michael Roza, Candy Alexander, Anirudh Murali, Scotty Andrade, Mark Y., Chris Kirschke, Josh Buker | 30 comments on LinkedIn

LinkedIn Posts

CSA Publishes Research on Secure Agentic System Design | Nate Lee posted on the topic | LinkedInI’m very excited to announce the CSA’s publication of my newest research paper on secure agentic system design! “Secure Agentic System Design: A Trait-Based Approach.” (Linked in comments!) We’re building systems with AI